In brief

The Artificial Intelligence Act ("AI Act") was published in the Official Journal of the European Union (EU) as Regulation (EU) 2024/1689 on 12 July 2024. This landmark legislation establishes a comprehensive regulatory framework for Artificial Intelligence (AI). It sets stringent rules to ensure that AI systems are safe and transparent and that they respect fundamental rights, while promoting innovation. Here's what to expect:

The EU AI Act will enter into force on 1 August 2024;

The AI Act impacts all users of AI systems, not just developers and distributors;

Every organisation using AI needs to ensure AI literacy and to perform a risk assessment of its applications;

PwC provides a comprehensive set of services that support you in meeting your legal obligations.

In detail

AI Definition

Article 3(1) of the AI Act defines an AI system as a machine-based system that is designed to operate with varying levels of autonomy, that may exhibit adaptiveness after deployment, and that infers from the input it receives how to generate outputs such as predictions, content, recommendations, or decisions. Recital 12 clarifies that the definition does not cover systems based on rules defined solely by humans to execute operations automatically. The defining feature of an AI system is its capability to infer, using machine learning or logic- and knowledge-based approaches, which enables learning, reasoning, and modelling beyond basic data processing.

The impact of the AI Act on an organisation depends on its role

The legal framework will apply to both public and private actors inside and outside the EU, as long as the AI system is placed on the Union market or its output is used in the EU. It can concern both providers and deployers of high-risk AI systems. It does not apply to private, non-professional uses. In general, the AI Act distinguishes between the following roles:

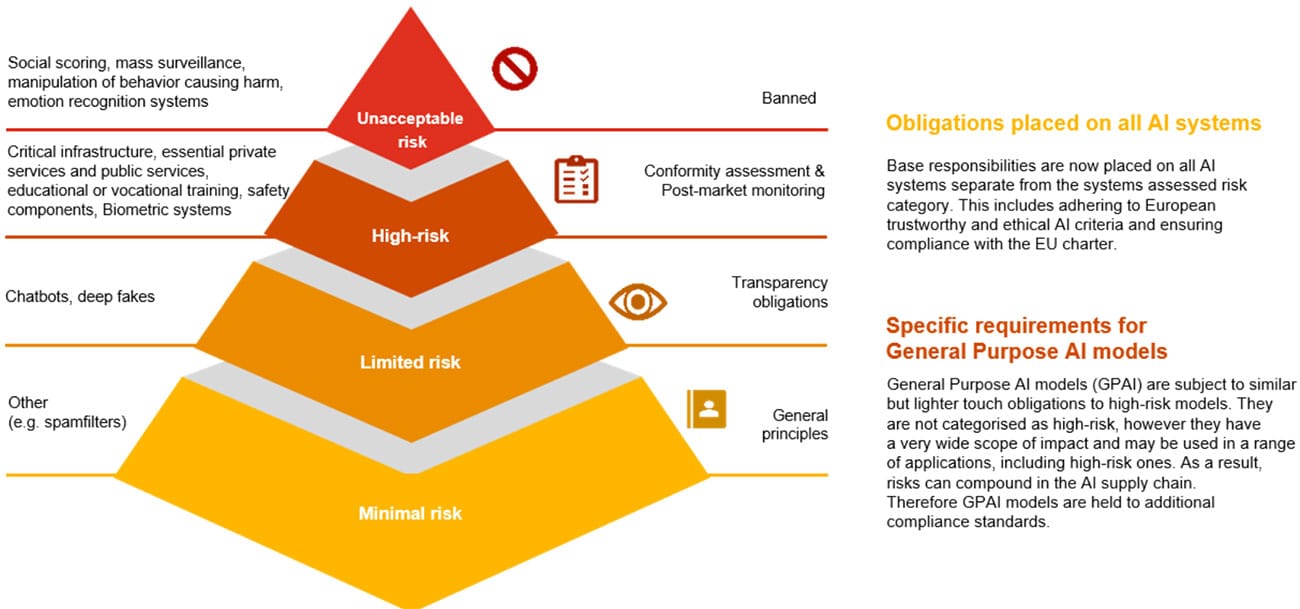

A risk-based approach towards AI

The AI Act adopts a risk-based approach to regulating AI systems. The stringency of the applicable rules depends on the potential severity of harm to fundamental rights and the likelihood of that harm occurring. The risk categories are:

Deployers’ Obligations

Deployers of high-risk AI systems must implement appropriate technical and organisational measures, assign human oversight, monitor the systems' operation, ensure that the input data under their control is relevant and representative, keep the logs the systems generate, and, where applicable, conduct data protection impact assessments. For instance, if a high-risk AI system is used in the workplace, the affected employees must be informed of its use.

General-purpose AI (GPAI) models

Providers and deployers of AI systems using GPAI models are not subject to Chapter V of the AI Act but must follow its general rules. GPAI models and systems are treated separately, as highlighted in the graphic above. Providers of GPAI models must fulfil several obligations, including drawing up technical documentation, putting in place a copyright policy, and publishing a summary of the content used for training.

What is the timeline?

The AI Act enters into force on 1 August 2024, and its provisions become applicable in stages:

Most of the AI Act's provisions will apply from 2 August 2026. However, AI systems that present an unacceptable risk, as outlined in Chapter II, will be prohibited from 2 February 2025. The AI literacy requirements set out in Chapter I will apply from the same date.
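For teams that track these dates in an internal compliance tool, the staged timeline can be sketched in a few lines of Python. This is a minimal illustration only: the function name `milestones_reached` and the milestone labels are hypothetical, and the list covers only the dates mentioned in this article, not every deadline in the Regulation.

```python
from datetime import date

# Dates as stated in this article; the labels are illustrative shorthand,
# not legal wording, and the list is not exhaustive.
MILESTONES = [
    (date(2024, 8, 1), "AI Act enters into force"),
    (date(2025, 2, 2), "Prohibition of unacceptable-risk AI systems (Chapter II)"),
    (date(2025, 2, 2), "AI literacy requirements (Chapter I)"),
    (date(2026, 8, 2), "Majority of the AI Act's provisions"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones dated on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    # Example: list what already applies on a given review date.
    for label in milestones_reached(date(2025, 6, 30)):
        print(label)
```

Such a lookup only indicates which milestones have passed; whether a given obligation applies to a specific organisation still depends on its role and on the risk category of the AI system concerned.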

How can PwC help?

Complying with the AI Act presents significant strategic and governance challenges that must be met within the applicable deadlines. To help you ensure compliance and mitigate risks, PwC Luxembourg offers a wide range of services. Our specialists can explain the implications of the AI Act, identify gaps, design appropriate solutions, and implement them. We assist with AI strategy, regulatory compliance, risk management, and technology. When implementing AI and machine learning (ML) technologies, the AI Act is not the only regulatory framework to consider: DORA, GDPR, ESG, liability, and outsourcing regulations also play a critical role and must be taken into account.

Reach out to us for a first assessment regarding EU AI Act compliance.

Contact us

Sébastien Schmitt

Advisory Partner, Regulatory, Risk and Compliance, PwC Luxembourg

Tel: +352 621 334 242

Ryan Davis

Advisory Partner, Risk & Compliance Advisory Services, PwC Luxembourg

Tel: +352 621 333 580

Andreas Braun

Advisory Managing Director, Data Science & AI Team Lead, PwC Luxembourg

Tel: +352 621 332 366

Tomasz Wolowski

Senior Manager, Regulatory & Compliance Advisory Services, PwC Luxembourg

Tel: +352 621 332 243